Delve 13: Let's Build a Modern ML Microservice Application - Part 7, Model Tracking and APIs with MLFlow

"Machine learning models are only as good as their deployment strategy. An unused model is just a fancy equation." - Chat GPT

Reset and Rescope

Hello data delvers! In part six of this series we containerized our application, making it portable and easy to deploy. For this part we will take a step back, introduce machine learning (finally!), and explore how we can begin to incorporate machine learning models into our microservice ecosystem!

Machine Learning (Finally!)

This series has up until now focused on core software engineering knowledge. This is intentional. If you've read some of my previous delves, you'll know that I view machine learning grounded in good software engineering practices as essential to extracting business value at scale. It is for this reason I wanted to ensure we had laid a sturdy foundation in engineering before introducing the science, but without further ado, let's dive in!

This series will not focus on machine learning fundamentals, as there are plenty of good resources out there that already cover them. What I will touch on, however, is how to develop machine learning models with an engineering mindset. To begin, go ahead and create an account on Kaggle. Kaggle is ubiquitous in the data science world as a place to host data science competitions (like the famous Zillow Prize), but it is also an invaluable resource to learn from. For this delve we will be working with the dataset provided by the House Prices - Advanced Regression Techniques learning competition, so go ahead and sign up for it with your Kaggle account and take a quick look at the data it provides, as well as some of the submissions others have made to the competition, to get a feel for what is working.

Prepare Your Project

Instead of building off our existing codebase we are going to start fresh with a brand new project (don't worry, we'll be revisiting our previous codebase in a future delve!). Go ahead and initialize a starter project with uv init, which will give you a basic file structure that looks something like this:

├── .git

├── .gitignore

├── .python-version

├── README.md

├── hello.py

└── pyproject.toml

You can go ahead and remove the hello.py script, we won't be using it. Now, our future project will have multiple parts, such as training the model and using the model as part of microservices. As you can probably imagine, the set of Python library dependencies we have for training the model may be different from the dependencies we have when we use the model. However, any libraries the different parts of the project have in common should probably stay at the same version. Previously this was a big headache. We could keep a separate requirements.txt file for each part of the project to maintain different sets of dependencies, but making sure that a dependency re-used in multiple parts was the same version everywhere? Good luck! Fortunately for us, uv comes to the rescue here with its concept of workspaces. We can have a single uv workspace with one uv.lock file but multiple sub-applications within it, each with its own dependency list.

Let's try it out! Go ahead and create a housing-price-model directory to hold our model application. Within it create a new pyproject.toml file with the following contents:

| housing-price-model/pyproject.toml | |

|---|---|

The final trick to make this work is we have to modify the root pyproject.toml file to let uv know about this workspace member:

You can also add a project description too if you'd like. We should now have a project directory structure that looks like so:

├── .git

├── .gitignore

├── .python-version

├── README.md

├── housing-price-model

│ └── pyproject.toml

└── pyproject.toml

Next up, within the housing-price-model directory create a sub-directory called data and place the dataset files from Kaggle within it.

Tip

It's a good idea to add your data directory to your .gitignore so the data files don't get checked into GitHub. Not only is it against the rules of the competition to do so, it's generally bad practice to version data files with git. There are better tools for that, which we will cover in the future. 🙂

Finally, create another sub-directory called notebooks within the housing-price-model directory to hold our Jupyter notebooks for modeling. "Jupyter notebooks!?!? I thought we weren't supposed to use those?" I might hear you say, and based on my previous delve you wouldn't be wrong in asking the question. However, in this case I think Jupyter notebooks are useful for a few reasons:

- We are in the early phase of exploring the data and the problem and in this state the visual nature of the notebooks can be useful

- Notebooks are extremely useful when trying to teach or explain a concept, which is what I'm trying to do in this delve

- We'll be converting the model training code out of notebooks in a future delve 😉

With that we should have our final project directory structure that looks like this:

├── .git

├── .gitignore

├── .python-version

├── README.md

├── housing-price-model

│ ├── data

│ │ ├── data_description.txt

│ │ ├── sample_submission.csv

│ │ ├── test.csv

│ │ └── train.csv

│ ├── notebooks

│ └── pyproject.toml

└── pyproject.toml

Build a Model

To start off with we need to install our project dependencies. First off make sure you are in the housing-price-model directory in your shell, then you can use uv add to install all of these dependencies:

- pandas - Standard library for data manipulation

- scikit-learn - The most popular library for doing machine learning in Python

- plotly - My preferred library for generating data visualizations (there are much better options out there than matplotlib!)

Also, depending on what environment you want to run your notebooks in there are some optional dependencies as well:

- jupyterlab - The standard web-based notebook interface available from jupyter

- anywidget - An extension for jupyter that enables interactive plots

Note

I will be using JupyterLab as my notebook execution environment, however you can also run notebooks directly in VSCode.

Within the housing-price-model directory go ahead and fire up JupyterLab by executing jupyter lab in your shell. Within the notebooks folder create a new notebook called train_model.ipynb.

Tip

If you've never used JupyterLab before you can check out their getting started docs here!

To start off create a new cell to import our dependencies:

Note

If you'd rather follow along with a completed notebook you can do so here!

It's best practice to import all of your libraries at the top of your notebook, just like a regular Python script, rather than throughout the notebook itself. This way you can quickly tell at a glance the dependencies of the notebook.

Next off we can load our dataset into memory using pandas:

| Cell 2 | |

|---|---|
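As a rough sketch of what this loading step could look like: the load_datasets helper and the ../data location (relative to the notebooks folder) are assumptions made for illustration, and the companion notebook may do it differently.

```python
from pathlib import Path

import pandas as pd


def load_datasets(data_dir: Path) -> tuple[pd.DataFrame, pd.DataFrame]:
    """Read the competition's train.csv and test.csv from data_dir."""
    train_df = pd.read_csv(data_dir / "train.csv")
    test_df = pd.read_csv(data_dir / "test.csv")
    return train_df, test_df


# Assumed location of the data when running from the notebooks folder.
DATA_DIR = Path("../data")

if DATA_DIR.exists():
    train_df, test_df = load_datasets(DATA_DIR)
    # Printing the shapes makes the difference in column counts visible.
    print(train_df.shape, test_df.shape)
```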

We can note that our test data has one fewer column than our training data (this makes sense since it doesn't have an actual price for each house).

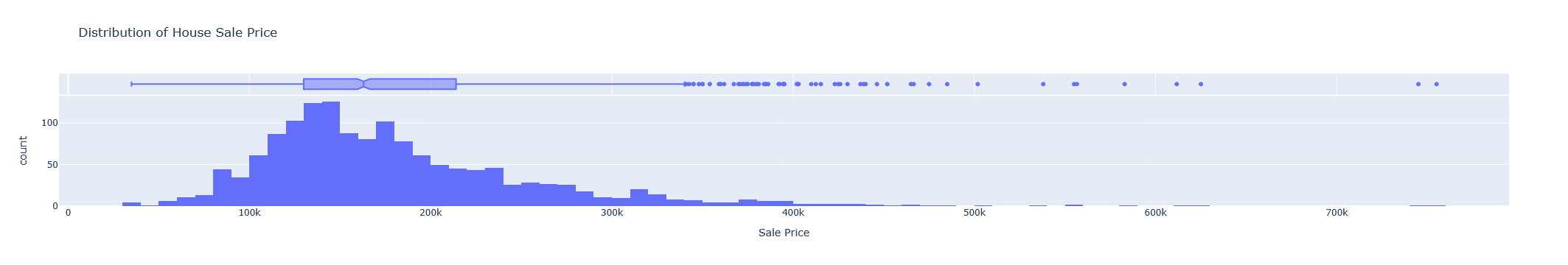

If we want to visualize our target variable (Sale Price) we can do so using Plotly like so:

| Cell 3 | |

|---|---|

This will give you a visualization that looks something like this:

Note

While the image above is a static png file, the true power of Plotly is that it is interactive! Try out the code within the notebook and hover your mouse over the graph, it will give you more details!

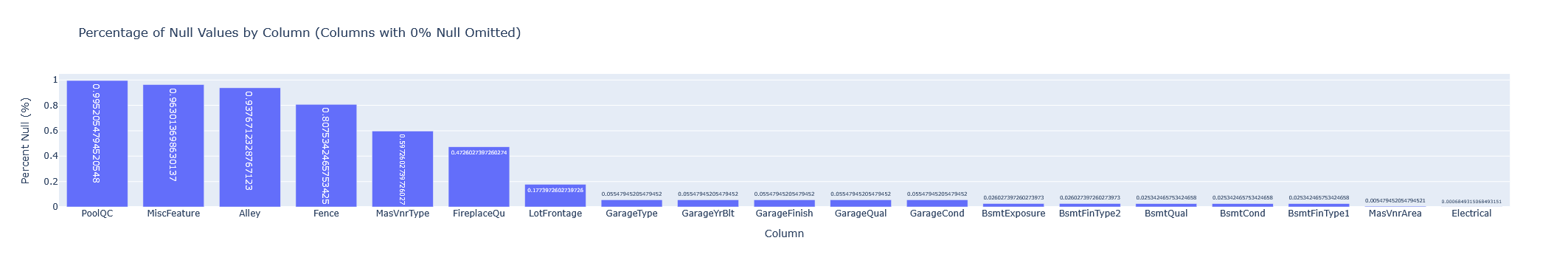

In a similar way, we can use Plotly to visualize the percentage of null values in each column to identify any columns that are mostly null (it's usually good practice to drop columns that are mostly null as they won't provide much information to the model).

This will give you the following plot:

We can then drop columns with a high percentage of nulls using pandas:

| Cell 5 | |

|---|---|
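A sketch of the drop step. The 50% cutoff is an assumption chosen for illustration; pick whatever threshold the null-percentage plot suggests for your data.

```python
import pandas as pd


def drop_mostly_null_columns(df: pd.DataFrame, threshold: float = 0.5) -> pd.DataFrame:
    """Keep only columns whose fraction of nulls is at or below threshold."""
    null_fraction = df.isna().mean()
    columns_to_keep = null_fraction[null_fraction <= threshold].index
    return df[columns_to_keep]


# Toy stand-in for the training data (values are made up for illustration).
toy_df = pd.DataFrame(
    {
        "PoolQC": [None, None, None, "Gd"],
        "LotArea": [8450, 9600, 11250, 9550],
        "LotFrontage": [65.0, None, 68.0, 60.0],
    }
)

cleaned_df = drop_mostly_null_columns(toy_df)
print(list(cleaned_df.columns))
```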

We do a final bit of cleaning with pandas and form our feature and target columns:

| Cell 6 | |

|---|---|

Next we do a pretty standard train test split using scikit-learn:

| Cell 7 | |

|---|---|
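For reference, a sketch of the split. It assumes the features are everything except the Id and SalePrice columns, and the 80/20 ratio and random seed are illustrative choices rather than the notebook's actual values.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Toy stand-in for the cleaned training data (values are made up).
toy_df = pd.DataFrame(
    {
        "Id": range(1, 11),
        "LotArea": [8450, 9600, 11250, 9550, 14260, 14115, 10084, 10382, 6120, 7420],
        "SalePrice": [208500, 181500, 223500, 140000, 250000,
                      143000, 307000, 200000, 129900, 118000],
    }
)

# Assumption: Id is only an identifier and SalePrice is the target.
X = toy_df.drop(columns=["Id", "SalePrice"])
y = toy_df["SalePrice"]

# Hold out 20% of the rows; a fixed random_state makes the split repeatable.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)
```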

We round out our data exploration with identifying the type of data in each column (namely whether they are categorical or numeric):

| Cell 8 | |

|---|---|
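A sketch of one way to do this with pandas dtypes. Treating every non-numeric column as categorical is an assumption that happens to suit this kind of tabular data.

```python
import pandas as pd


def split_columns_by_type(X: pd.DataFrame) -> tuple[list[str], list[str]]:
    """Return (numeric_columns, categorical_columns) based on column dtypes."""
    numeric_columns = X.select_dtypes(include="number").columns.tolist()
    categorical_columns = X.select_dtypes(exclude="number").columns.tolist()
    return numeric_columns, categorical_columns


# Toy stand-in for the feature DataFrame (values are made up).
toy_X = pd.DataFrame(
    {
        "LotArea": [8450, 9600, 11250],
        "LotFrontage": [65.0, 80.0, 68.0],
        "Street": ["Pave", "Pave", "Grvl"],
    }
)

numeric_columns, categorical_columns = split_columns_by_type(toy_X)
print(numeric_columns, categorical_columns)
```

Keep in mind that a dtype check cannot spot categories that are stored as numbers (MSSubClass in this dataset is one), so those need to be moved to the categorical list by hand if you want them treated that way.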

Feature Engineering

The next part of our notebook will focus on feature engineering. It is also where I want to spend a decent amount of time. Feature engineering is in my experience often where the breakdown between Data Scientists and ML Engineers occurs. I think a large part of this is due to how feature engineering is typically presented in tutorials (and indeed many of the notebooks submitted to this competition). It is usually viewed as a separate stage of the pipeline distinct from the model itself. In such cases you will typically see pandas being used (the ubiquitous pd.get_dummies() for encoding categorical variables for example) to transform the dataset into the features the model utilizes. This creates two issues:

- Since feature engineering can be any arbitrary python code it can become very complex and very messy

- Any feature engineering that is performed will have to be replicated in the environment where the model is used to make predictions

These issues combine to create situations where engineers must translate and replicate feature engineering logic from notebooks to their production serving environments. As I've discussed in previous delves, this process is prone to error and can create a lot of friction. However, there is a better way, and we already have all the tools to do it!

Instead of treating the feature engineering logic as a separate component, we could bundle it with the model itself. We'd have to set some constraints on what feature transformations could be performed (they couldn't call out to external APIs for example), but then we wouldn't have to worry about replicating any feature engineering logic as it would be a part of the model!

scikit-learn actually supports this concept through the use of Column Transformers & Pipelines, though it is sadly not widely publicized. For example, if we wanted to replace all missing values in numeric columns with the column median, and all missing values in categorical columns with the most frequent value, we could do the following transformation in pandas:

| Hypothetical Feature Engineering | |

|---|---|
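To make the pandas-only approach concrete, here is a sketch of what such free-form imputation code might look like. The function name and toy data are hypothetical.

```python
import pandas as pd


def impute_with_pandas(df: pd.DataFrame) -> pd.DataFrame:
    """Fill numeric nulls with the median and other nulls with the mode."""
    df = df.copy()
    numeric_columns = df.select_dtypes(include="number").columns
    categorical_columns = df.select_dtypes(exclude="number").columns

    # Median of each numeric column, computed from the data passed in.
    df[numeric_columns] = df[numeric_columns].fillna(df[numeric_columns].median())

    # Most frequent value of each categorical column.
    for column in categorical_columns:
        df[column] = df[column].fillna(df[column].mode().iloc[0])
    return df


# Toy stand-in for the feature DataFrame (values are made up).
toy_X = pd.DataFrame(
    {
        "LotFrontage": [60.0, None, 80.0],
        "Street": ["Pave", None, "Pave"],
    }
)

imputed_X = impute_with_pandas(toy_X)
```

Note that the medians and modes here are recomputed from whatever data is passed in, so the values learned from the training set are not stored anywhere for later use at prediction time.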

However, now in order to use our model this logic will have to be run somewhere on the data before it is sent to the model (usually in a different layer of the application), hence an opportunity for breakage or mis-translation. This issue compounds if you are trying to test different versions of the model. What if one model version uses the median to impute missing numeric values, but another version of the model uses the mean? How do you ensure the right feature engineering logic is being applied to the right version of the model? The answer: You can't.

Instead of doing it this way though we could take advantage of the column transformers to define the logic in a bit more constrained way:

| Cell 9 | |

|---|---|

The ColumnTransformer takes in a list of tuples where the first value is a name for the transformation, the second value is a function conforming to the scikit-learn transformer signature, and the third value is a list of columns to apply the transformation on. Hopefully you can already see how this is much more structured and uniform compared to the previous code. Now you might say "That's great if I want to do a transformation built into scikit-learn, but what if it doesn't have the functionality I need?". Fortunately, scikit-learn supports creating your own transformer functions pretty easily with the FunctionTransformer or your own transformer class by inheriting from TransformerMixin so you aren't really giving up any functionality, just obeying a common signature.
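The tuple structure described above can be sketched as follows. The column lists, the toy data, and the log1p step (included only to show a FunctionTransformer) are assumptions for illustration, not the notebook's actual transformations.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.preprocessing import FunctionTransformer

numeric_columns = ["LotFrontage"]
categorical_columns = ["Street"]
skewed_columns = ["LotArea"]  # hypothetical: columns we want log-scaled

# Each tuple is (name, transformer, columns the transformer applies to).
transformer = ColumnTransformer(
    transformers=[
        ("impute_numeric", SimpleImputer(strategy="median"), numeric_columns),
        ("impute_categorical", SimpleImputer(strategy="most_frequent"), categorical_columns),
        # FunctionTransformer wraps a plain function in the transformer interface.
        ("log_area", FunctionTransformer(np.log1p), skewed_columns),
    ]
)

# Toy stand-in for the feature DataFrame (values are made up).
toy_X = pd.DataFrame(
    {
        "LotFrontage": [60.0, np.nan, 80.0],
        "Street": ["Pave", np.nan, "Pave"],
        "LotArea": [8450, 9600, 11250],
    }
)

# fit_transform learns the median and mode, then applies every step.
transformed = transformer.fit_transform(toy_X)
```

The output columns follow the order of the tuples, so the second row here ends up with the learned median and most frequent value filled in.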

We can apply one-hot encoding to our categorical features in a similar way:

| Cell 10 | |

|---|---|

Note

I go into a bit more depth on what these transformations are actually doing in the companion notebook so be sure to check it out!

Create our model:

| Cell 11 | |

|---|---|

And attach the transformations to the model by creating a Pipeline:

| Cell 12 | |

|---|---|

This will also display a visualization of the pipeline in the notebook output!

Now we can train our model with the feature engineering attached:

| Cell 13 | |

|---|---|

And make predictions on the test set with:

| Cell 14 | |

|---|---|

Notice how we didn't have to transform our data at all before we fed it into the model for predictions? That's the power of attaching our feature engineering directly to the model! Now some poor engineer doesn't have to replicate that logic anywhere and if we wanted to test different versions of the model with different feature engineering logic? No sweat, each version has the correct logic attached to it already. This simple approach of attaching feature engineering logic to models can dramatically reduce friction when deploying them and speed up time to production.
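Putting the pieces together, here is a self-contained sketch of the pattern. The choice of RandomForestRegressor, the nesting of the imputation and encoding steps, and the toy data are all assumptions; the notebook's actual model and layout may differ.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.ensemble import RandomForestRegressor
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder

numeric_columns = ["LotArea", "LotFrontage"]
categorical_columns = ["Street"]

# Categorical columns are imputed first and then one-hot encoded.
categorical_steps = Pipeline(
    steps=[
        ("impute", SimpleImputer(strategy="most_frequent")),
        ("one_hot", OneHotEncoder(handle_unknown="ignore")),
    ]
)

preprocessor = ColumnTransformer(
    transformers=[
        ("numeric", SimpleImputer(strategy="median"), numeric_columns),
        ("categorical", categorical_steps, categorical_columns),
    ]
)

# The feature engineering and the model travel together as one object.
pipeline = Pipeline(
    steps=[
        ("preprocessor", preprocessor),
        ("model", RandomForestRegressor(n_estimators=50, random_state=42)),
    ]
)

# Toy training data with missing values (numbers are made up).
toy_X = pd.DataFrame(
    {
        "LotArea": [8450, 9600, 11250, 9550, 14260, 14115],
        "LotFrontage": [65.0, 80.0, np.nan, 60.0, 84.0, 85.0],
        "Street": ["Pave", "Pave", np.nan, "Grvl", "Pave", "Pave"],
    }
)
toy_y = pd.Series([208500, 181500, 223500, 140000, 250000, 143000])

pipeline.fit(toy_X, toy_y)

# Raw, untransformed rows (including a missing value) go straight in.
new_rows = pd.DataFrame(
    {"LotArea": [9000], "LotFrontage": [np.nan], "Street": ["Grvl"]}
)
predictions = pipeline.predict(new_rows)
```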

Tip

As with any software pattern, bundling feature engineering logic with the model has the potential to be misused. (I've seen whole A/B tests orchestrated within bundled feature engineering logic, for example.) When deciding what logic to bundle with the model, a good rule of thumb is this: if the logic is specific to the training set the model was built on (a median value changes based on the samples in the training set, for example), consider bundling it with the model. If the transformation is not related to the training set (choosing which version of the model to use for which customers, for example), don't bundle it. This rule can be broken, however, if computing the feature on-the-fly as data is sent to the model is computationally expensive. As with all things, a balance must be found between convenience and computational practicality.

Get in the Flow with MLFlow

As with all science, the key to success is experimentation. We rarely get it right on the first try; we have to try different algorithms and parameters to see what works and what doesn't for the problem we are trying to solve. There is no free lunch. Therefore, keeping track of our experiments is of paramount importance, and that's where another tool, MLFlow, is particularly helpful. Originally developed by Databricks, MLFlow is an all-in-one solution for experiment tracking and reporting, model versioning, and more. We can install it by running uv add mlflow in the root of our project.

MLFlow follows a centralized server paradigm but for example purposes we can run the server locally. Create a new directory in the project root called mlflow-server and inside an executable bash script with the following contents:

Go ahead and execute the script to start MLFlow and check out the server UI. There won't be a whole lot here yet!

Tip

MLFlow will create two directories mlartifacts and mlruns when it executes. You can choose to add these to the .gitignore so they don't get checked in (and you probably should in production settings) but it means your trained model artifacts will not be checked into git.

Next we are going to be modifying our notebook to utilize MLFlow. Since MLFlow is a dependency of modeling, we can add it to the housing-price-model application by running uv add mlflow in the housing-price-model directory as well (importantly the same version of MLFlow will be used).

First we have to import MLFlow:

We also have to tell MLFlow where the server is running:

| Cell 13 (Modified) | |

|---|---|

Next we modify our training code to run under an MLFlow experiment and log the trained model along with some metrics using MLFlow:

A few things to note here:

- The model training code is run under a with mlflow.start_run(): clause, which tells MLFlow this code will be a new run under the experiment.

- Model metrics and figures can be logged along with the model using the log_metric() and log_figure() functions respectively.

- infer_signature can be used to auto-infer a model data contract from the training data.

- The model artifact itself is logged with log_model().

Tip

If you read the MLFlow documentation you may come across the autolog feature. I've always found this feature to be buggy and unreliable, so I prefer to log experiment artifacts explicitly.

Go ahead and run the above code and check out the MLFlow UI. You should see a Housing Price Prediction experiment available with the run under it (usually with a cute autogenerated name). If you click around you should be able to view the logged metrics and figures as well as the model artifact itself. Subsequent runs will also be available here (provided they are run under the same experiment name), allowing you to easily compare different versions of the model and, importantly, have a record (ideally backed up on an external server) of all variations trained!

Tip

I'm just scratching the surface of MLFlow functionality, I encourage you to check out the MLFlow Docs to see all of the functionality available!

Create an API

With our model tracked in MLFlow, believe it or not, we have all we need to turn it into an API. One of the benefits of MLFlow is that it comes with a serving layer that can host any MLFlow model as an API within a Docker container!

To take advantage of this we can create a new sub-directory under housing-price-model called deployment with the following model-build.sh script:

We can then execute it by running ./model-build.sh runs:/{RUN_ID}/model house-price-model where {RUN_ID} is the Run ID of the model training run, which you can find in the MLFlow UI.

Tip

You may need to export the location of the MLFlow tracking server to an environment variable with the command export MLFLOW_TRACKING_URI=http://localhost:9999 in order for it to work (or add it to your .bashrc file or equivalent).

This will:

- Create a new directory called build

- Create a Dockerfile within it that will define the image to host the model and its dependencies

- Download the model artifact from the MLFlow server into a sub-directory called model_dir

- Build the Docker image and tag it as house-price-model

Note

There is an mlflow models build-docker command that will do this all in one command, but I prefer to use mlflow models generate-dockerfile and docker build separately so I can view the intermediate build outputs in case something goes wrong.

It's hard to overstate the magic that just occurred. I have seen engineers spend weeks trying to get a model artifact, with all its dependencies at all the right versions, bundled together into a container, and we just did it with two commands!

We can spin up our model API pretty easily too! In the root of the project create a compose.yaml file with the following contents:

| compose.yaml | |

|---|---|

Then run docker compose up!

In order to hit our API, MLFlow expects the data in something known as dataframe_split format. Fortunately, we can easily convert our test dataset into that format by running the following snippet of code in our model training notebook:

| Model Input JSON Conversion Snippet | |

|---|---|
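A sketch of such a conversion, plus an optional standard-library call for anyone who prefers Python to a REST client. The helper names and toy data are hypothetical; going through DataFrame.to_json is a choice made here so that missing values become null rather than NaN, which is not valid JSON.

```python
import json
import urllib.request

import numpy as np
import pandas as pd


def to_dataframe_split_json(df: pd.DataFrame, n_rows: int = 5) -> str:
    """Serialize the first n_rows of df as a dataframe_split request body."""
    # orient="split" yields separate "columns", "index", and "data" entries.
    split = json.loads(df.head(n_rows).to_json(orient="split"))
    return json.dumps({"dataframe_split": split})


def invoke(payload: str, url: str = "http://localhost:8080/invocations"):
    """POST the payload to the model server and return the parsed response."""
    request = urllib.request.Request(
        url,
        data=payload.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())


# Toy stand-in for the test features (values are made up).
toy_X = pd.DataFrame(
    {
        "LotArea": [8450, 9600],
        "LotFrontage": [65.0, np.nan],
        "Street": ["Pave", "Grvl"],
    }
)

payload = to_dataframe_split_json(toy_X)
print(payload)
```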

This will grab the first five rows of the dataset. Use your REST client of choice (I like Bruno) to put the JSON in the body of a POST request and send it to http://localhost:8080/invocations. You should get something like the following output back:

| Model Output Predictions | |

|---|---|

Each element in the array is the prediction for the corresponding row in the input dataframe. Congratulations! You now have a running model API!

Putting it all Together

Training and deploying models is the heart of MLOps and is often fraught with frustration and friction, but by being intentional about the tooling we use to train our models and how we bundle the feature engineering logic they depend on, we can greatly reduce the amount of overhead it takes! In the next part of this series we'll take a look at how we can integrate this deployed model into the microservices concepts we have developed before, stay tuned! Code for this part can be found here!

Delve Data

- Many challenges exist when training and deploying ML models

- By leveraging scikit-learn column transformers and pipelines we can greatly reduce the amount of feature engineering translation logic that needs to be done

- MLFlow provides a convenient framework for both tracking model experimentation and deploying model artifacts as APIs